
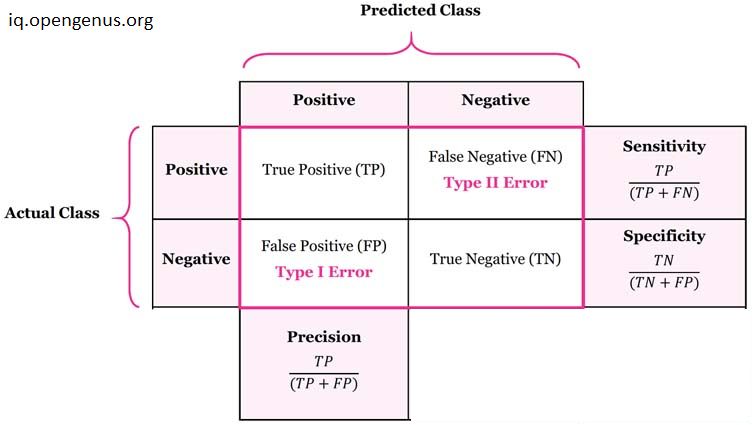

A confusion matrix is a table that sets actual values against predicted values.

- It groups the predictions of a classification model by outcome, which makes it a way to measure the model's performance.

- The image above shows what the confusion matrix of a binary classification problem looks like.


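[!Caution]+ By the convention used here, actual values are the columns and predicted values are the rows (as in the example screenshot above). Other sources and libraries may use the opposite layout, so check the orientation before reading off TP, FP, FN and TN.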
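
To make the layout concrete, here is a minimal sketch in plain Python that counts the four cells of a binary confusion matrix with predictions as rows and actual values as columns. The function name `confusion_matrix_2x2` and the sample labels are made up for illustration and do not come from any library or from the referenced articles.

```python
from collections import Counter


def confusion_matrix_2x2(actual, predicted, positive=1):
    """Return [[TP, FP], [FN, TN]].

    Rows are predictions (positive, negative) and columns are actual
    values (positive, negative), matching the convention in this note.
    """
    # Count each (predicted is positive, actual is positive) combination.
    counts = Counter(
        (p == positive, a == positive) for a, p in zip(actual, predicted)
    )
    tp = counts[(True, True)]    # predicted positive, actually positive
    fp = counts[(True, False)]   # predicted positive, actually negative
    fn = counts[(False, True)]   # predicted negative, actually positive
    tn = counts[(False, False)]  # predicted negative, actually negative
    return [[tp, fp], [fn, tn]]


# Hypothetical labels: 1 = has Covid, 0 = does not have Covid.
actual = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 0, 1, 0, 0, 1]

matrix = confusion_matrix_2x2(actual, predicted)
print(matrix)  # [[3, 1], [1, 3]]
```

Swapping the roles of rows and columns gives the transposed table, which is why the orientation needs to be checked before the cells are interpreted.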


Summary metrics condense the confusion matrix into single numbers, which makes it easier to compare models.

- To make this concrete, take a Covid test given to, say, 100 people to detect whether or not they have Covid.

- Accuracy – how many correct predictions out of all predictions

- The number of people who tested positive and do have Covid, plus the number who tested negative and do not have it, divided by the total number of people tested

- \[Accuracy=\frac{TP+TN}{TP+FP+TN+FN}\]

- Sensitivity (Recall) – a counterpart of accuracy that focuses on the positive class only (TP)

- how many correct (true) positive predictions out of all actual positives

- The number of people who tested positive and do have Covid, divided by the number of people who actually have Covid

- \(Recall = \frac{TP}{TP+FN} = \frac{TP}{ActualPos}\) ^7bb12a

- Specificity – a counterpart of accuracy that focuses on the negative class only (TN) ^4def3e

- The number of people who tested negative and do not have Covid, divided by the number of people who actually do not have Covid

- \(Specificity = \frac{TN}{TN + FP} = \frac{TN}{ActualNeg}\) ^547e31

- Precision – how many correct (true) positive predictions out of all predicted positives

- e.g. if we bet that someone is positive, how often is that bet right? It is like accuracy, but for one predicted category only (in the case of binary classification).

- \[Precision = \frac{TP}{TP+FP} = \frac{TP}{PredPos}\]
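
The four formulas above can be checked with a short Python sketch. The counts below (18 TP, 5 FP, 75 TN, 2 FN, adding up to 100 people) are invented for the Covid example and are not real data; the function name `classification_metrics` is likewise only illustrative.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute the four summary metrics from confusion-matrix counts.

    Assumes every denominator is non-zero, i.e. there is at least one
    actual positive, one actual negative and one predicted positive.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,   # correct predictions / all predictions
        "recall": tp / (tp + fn),        # TP / actual positives (sensitivity)
        "specificity": tn / (tn + fp),   # TN / actual negatives
        "precision": tp / (tp + fp),     # TP / predicted positives
    }


# Hypothetical results for 100 tested people.
metrics = classification_metrics(tp=18, fp=5, tn=75, fn=2)

for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, accuracy is 93/100, recall is 18/20, specificity is 75/80 and precision is 18/23. Precision comes out lower than the other three because 5 of the 23 positive test results are false alarms.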

Review #flashcards/statistics

What does Sensitivity in a Confusion Matrix represent? || It measures how many positives were predicted correctly (true positives) out of all actual positives. In other words, “are you able to pick up all the positives?”

What is the formula for Sensitivity? || $Recall = \frac{TP}{TP+FN}$

What does Precision in a Confusion Matrix represent? || It measures how many positives were predicted correctly (true positives) out of all predicted positives. In other words, “are your bets on a positive prediction accurate enough?”

What is the formula for Precision? || $Precision = \frac{TP}{TP+FP}$

References

- [Confusion Matrix What is Confusion Matrix Confusion Matrix for DS (analyticsvidhya.com)](https://www.analyticsvidhya.com/blog/2021/05/in-depth-understanding-of-confusion-matrix/)

- [Data Science in Medicine — Precision & Recall or Specificity & Sensitivity? by Alon Lekhtman Towards Data Science](https://towardsdatascience.com/should-i-look-at-precision-recall-or-specificity-sensitivity-3946158aace1)

- Precision, Recall, Sensitivity and Specificity (opengenus.org)

Metadata

- topic:: 00 Statistics

- updated:: 2022-08-03

- reviewed:: 2022-08-03

- #LiteratureNote